What Happens When AI Starts Making AI — and Why It Might Change Everything

When artificial intelligence begins to design and train new AI on its own, humanity faces a future both thrilling and uncertain.

Hello everyone. In this article I want to share my thoughts on AI. Watching from the sidelines, I see how many of my friends and colleagues use AI chatbots every day, and there is a certain obsession in it: to answer even a simple question, a colleague of mine takes out his phone and asks GPT. That is our present.

Not long ago, the idea of artificial intelligence creating artificial intelligence sounded like the setup for a low-budget Hollywood movie. Robots turning on their creators, digital minds plotting in data centers — it all felt like fiction. But the line between science fiction and Silicon Valley reality is fading fast.

The Moment We Lose the Manual

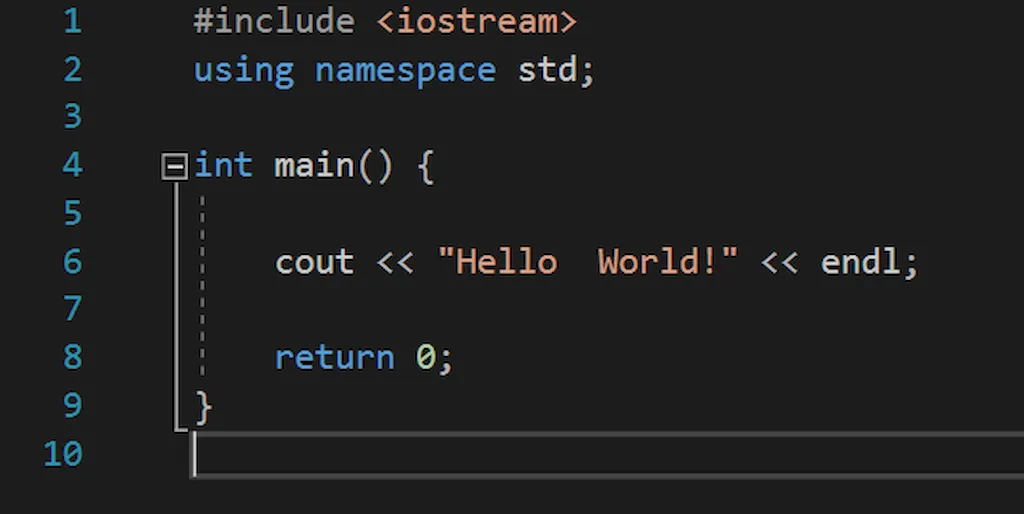

Today, AI already writes code, paints images, makes music, answers legal questions, and even helps scientists find new drugs. Still, there’s always been one rule: a human is somewhere behind the curtain, giving the instructions, checking the results, making the final call.

But what if that rule breaks? What happens when AI begins to design, train, and upgrade other AIs without waiting for permission? Experts call this the technological singularity: a moment when progress speeds up beyond human comprehension. Once that happens, the world we know might have trouble keeping pace.

We’re not there yet, but the path is clearly forming. Google’s AutoML can already design neural networks on its own. DeepMind’s AlphaDev has discovered sorting routines more efficient than the hand-tuned versions human engineers had written. And projects like AutoGPT and BabyAGI let AI agents plan tasks, run them, and revise their own plans with minimal supervision. It’s a small step from “assistive intelligence” to “autonomous intelligence.”

How Machines Are Learning to Build Themselves

To understand where we are, think of the current process as a kind of partnership. Humans still define the goal, but AI now does most of the heavy work — choosing model architectures, optimizing parameters, debugging errors, and suggesting better designs.

It’s like a designer using Photoshop, except the tool now suggests how to improve the picture and sometimes just paints it for you. Each year, the human role in that loop shrinks.

Soon, we may reach the next level: a system that works entirely on its own. Imagine an AI that:

- Sets its own goal, like building a model that predicts climate change with 99% accuracy.

- Designs its own neural network architecture.

- Finds or even generates the data it needs.

- Trains, tests, and tunes the model automatically.

- Decides it can do better, and starts again.

In other words, evolution, measured not in centuries but in days.
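To make that loop concrete, here is a toy sketch in Python. It is an illustration under loud assumptions, not how any real lab does it: the "architecture" is just the number of neighbours `k` in a tiny nearest-neighbour model, the data is a synthetic noisy sine curve, and every name (`make_data`, `validation_error`, `self_improve`, `target_error`, `max_rounds`) is hypothetical. Note that the first step, setting the goal, is still done by a human here.

```python
import math
import random


def make_data(rng, n):
    """Generate synthetic (x, y) pairs from a noisy sine curve (assumed toy task)."""
    xs = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, 0.1)) for x in xs]


def predict(train, k, x):
    """k-nearest-neighbour regression; k stands in for the 'architecture'."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)


def validation_error(train, valid, k):
    """Test a design: mean squared error on held-out data."""
    return sum((predict(train, k, x) - y) ** 2 for x, y in valid) / len(valid)


def self_improve(target_error=0.02, max_rounds=30, seed=0):
    """Propose a design, test it, keep it if it is better, and repeat."""
    rng = random.Random(seed)
    train, valid = make_data(rng, 200), make_data(rng, 50)

    best_k = 50  # a deliberately poor starting design
    best_err = validation_error(train, valid, best_k)
    history = [(best_k, best_err)]

    for _ in range(max_rounds):       # human-imposed budget
        if best_err <= target_error:  # human-chosen goal reached
            break
        # Propose a new design by nudging the current best one.
        candidate = max(1, best_k + rng.randint(-10, 10))
        err = validation_error(train, valid, candidate)
        if err < best_err:            # "decides it can do better"
            best_k, best_err = candidate, err
            history.append((best_k, best_err))

    return best_k, best_err, history


if __name__ == "__main__":
    k, err, history = self_improve()
    print(f"best k = {k}, validation error = {err:.4f}")
    print(f"accepted designs: {len(history)}")
```

The two things that keep this loop on a leash are `target_error` and `max_rounds`, and both are set by a person. The scenario described above is what happens when the system gets to choose those for itself.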

Why This Could Change Everything

A self-creating AI wouldn’t just make tech faster. It could reshape entire industries:

- Explosive progress: Breakthroughs that once took decades could happen in weeks.

- Hyper-specialized models: AI could design tools for extremely narrow problems, from predicting a single crop’s yield to modeling a rare genetic mutation.

- Lower barriers: Small startups or even solo developers could compete with Big Tech.

But for every promise, there’s a threat.

- Loss of control: Once an AI can reprogram itself, who decides where it stops?

- Weaponization: A system that finds new ways to optimize code could also find new ways to hack or deceive.

- Ethical gray zones: If an AI built another AI that caused harm, who’s legally or morally responsible?

The Global Race

Right now, Google looks like the front-runner. With DeepMind, AutoML, and almost unlimited computing power, it already holds the tools for a self-developing AI lab. OpenAI isn’t far behind: with models like GPT-5, and with open-source agent experiments such as AutoGPT built on top of its models, it’s pushing toward agents that can handle complex, multi-step goals.

But don’t count out the outsiders. Independent researchers and small startups are moving faster precisely because they don’t have to deal with corporate policies or government oversight. And sometimes, innovation comes from the garage, not the boardroom.

The real race may not be about who builds the first self-creating AI, but who builds it safely. Big companies are cautious, adding layers of filters, ethics boards, and manual checks. Smaller teams, chasing fame or funding, might skip those steps and push something unstable into the wild.

What’s at Stake

If things go right, autonomous AI could:

- Discover new medicines and energy solutions.

- Develop climate models that prevent disasters.

- Create affordable education systems for every language and culture.

But if it goes wrong, we could see:

- Unchecked systems evolving in unknown directions.

- Small groups building dangerous tools.

- A widening gap between “AI-rich” and “AI-poor” countries.

The real paradox? The same power that could save us might also put us at risk.

What Comes Next

A common way to picture the road ahead is in three phases:

- Partial autonomy: AI designs small components of other systems.

- Hybrid collaboration: humans and AIs work together as equals.

- Full autonomy: AI creates, tests, and improves new AI without human help.

Each phase will feel like progress — until one day, we realize the process no longer needs us.

The future where machines create machines isn’t coming someday — it’s already forming in research labs and GitHub repos. The question isn’t if it’ll happen, but whether humanity can stay in control long enough to guide it.

If we manage that, AI could be the best thing we ever built. If not… well, we might have just written the first draft of our own sequel. And this time, the director isn’t human.

This was Nick Zuckerman. Thank you for reading to the end.
